In-Depth Guide to Docker Containers, Architecture, and Compose

What is Docker?

Docker is an open-source platform designed to help developers build, ship, and run applications efficiently by containerizing them. Containers package an application and all its dependencies (libraries, frameworks, system tools, etc.) into a single, lightweight, and portable unit. This ensures that the application runs consistently across different environments, eliminating issues like version or dependency mismatches.

Key Advantages of Docker:

- Environment Consistency: Applications run exactly the same in development, testing, and production environments without setup hassles.

- Eliminates Version and Dependency Mismatches: Developers don’t have to worry about conflicts arising from different operating systems, library versions, or dependencies.

- Portability: Docker containers can run on any platform that supports Docker, including Linux, Windows, macOS, and cloud environments.

- Efficiency and Speed: Containers are lightweight and start quickly compared to traditional virtual machines, making them highly resource-efficient.

- Isolation: Each container runs in its own isolated environment, ensuring that one application’s issues do not affect others.

- Scalability: Containers are ideal for scaling applications horizontally by simply deploying additional containers.

Execution Context of Docker:

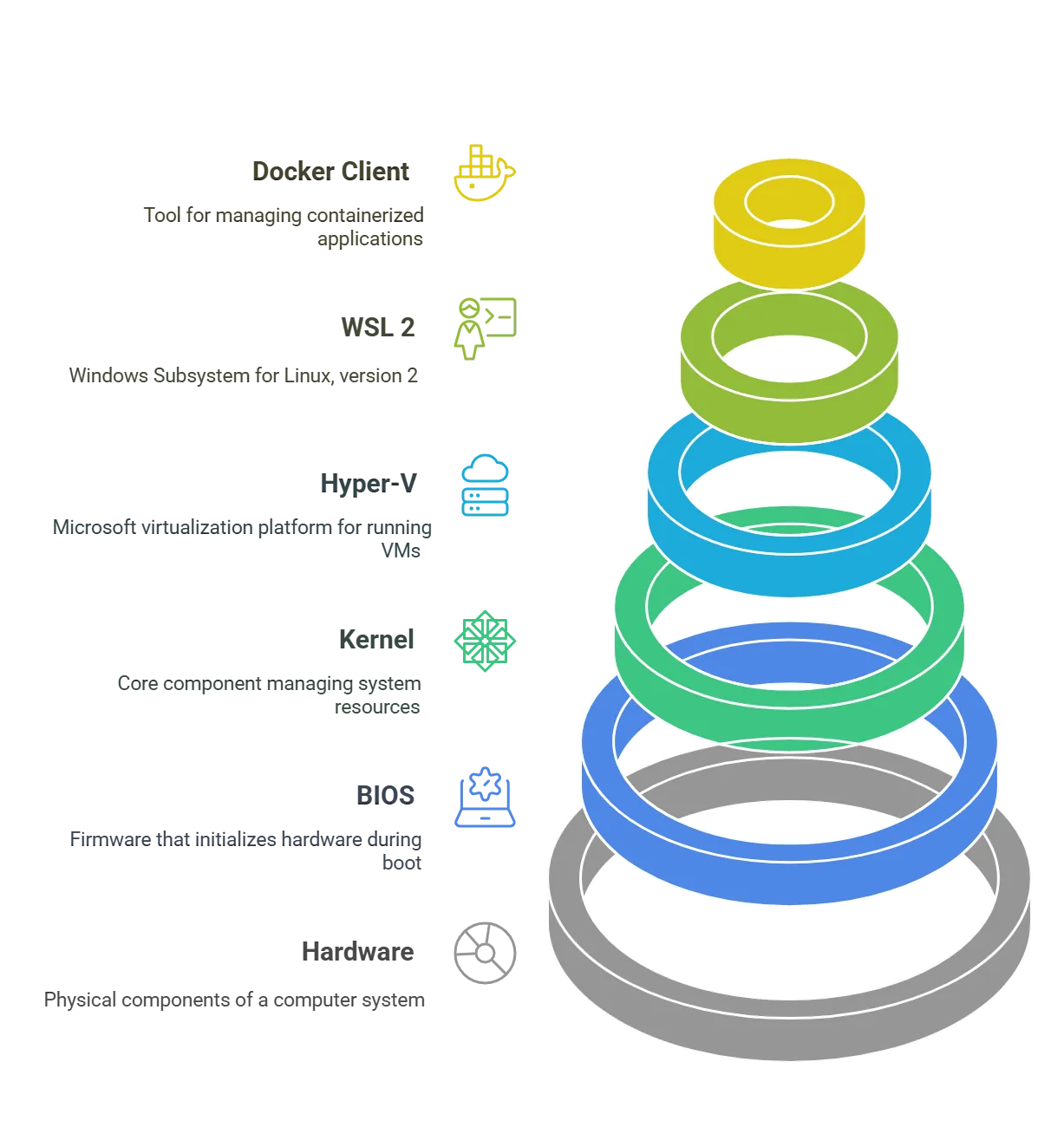

Docker creates and manages containers that run on a host operating system and draw on host resources on demand. Because containers share the host kernel instead of running a full guest OS, they are lightweight and start quickly. Below is a breakdown of the execution context.

- Hardware: The physical machine where everything runs — it provides the foundational resources like CPU, memory, and storage.

- BIOS/UEFI: The firmware that initializes hardware during the boot process and provides a low-level interface between the hardware and the operating system.

- Kernel: The core of the operating system that manages system resources, interacts with hardware, and provides abstractions (e.g., process management, networking). Docker relies heavily on the kernel for features like namespaces and cgroups, which are crucial for containerization.

- Hyper-V (Optional): If you’re running Docker on Windows, Hyper-V may be used to manage virtual machines that host the containerized environment. For example, Docker Desktop on Windows uses Hyper-V to create a lightweight VM if WSL 2 is not configured.

- WSL 2 (Windows Subsystem for Linux 2): On Windows systems, Docker Desktop leverages WSL 2 to run a lightweight Linux kernel inside Windows. This provides near-native Linux performance, as Docker containers natively depend on Linux kernel features.

- Docker Client: The command-line tool or graphical interface that developers use to interact with Docker. It sends commands to the Docker daemon.

- Docker Daemon: A background service that manages containers, images, networks, and volumes. It communicates with the Linux kernel (via system calls) to create and manage containers.

- Docker Engine: The overall client-server application, made up of the daemon, the API the daemon exposes, and the command-line client.
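The kernel features named in the breakdown above can be inspected without Docker at all. The following is a minimal sketch, assuming a Linux host with `/proc` mounted; the function names are made up for illustration. It lists the namespaces and cgroup membership of the current process, which are the same kernel facilities a container's processes are placed into.

```python
import os


def list_namespaces(pid="self"):
    """Return {namespace_name: identifier} for a process, read from /proc.

    Returns an empty dict where /proc/<pid>/ns is unavailable
    (for example on non-Linux hosts).
    """
    ns_dir = f"/proc/{pid}/ns"
    namespaces = {}
    try:
        names = sorted(os.listdir(ns_dir))
    except OSError:
        return namespaces
    for name in names:
        try:
            # Each entry is a symlink whose target looks like "pid:[4026531836]".
            namespaces[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue
    return namespaces


def read_cgroup_membership(pid="self"):
    """Return the lines of /proc/<pid>/cgroup, or an empty list if unreadable."""
    try:
        with open(f"/proc/{pid}/cgroup") as f:
            return [line.strip() for line in f if line.strip()]
    except OSError:
        return []


if __name__ == "__main__":
    for name, ident in list_namespaces().items():
        print(f"{name:20s} {ident}")
    for line in read_cgroup_membership():
        print("cgroup:", line)
```

Running the script on the host and again inside a container should generally show different namespace identifiers, which is one way to see the isolation at work.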

Workflow Overview:

- Linux Workflow: On a native Linux machine, Docker directly interacts with the Linux kernel to manage containers, avoiding the overhead of virtualization.

- Windows Workflow: On Windows, Docker Desktop uses WSL 2 or Hyper-V to run a lightweight Linux environment with a real Linux kernel, which provides the kernel features that Linux containers require.
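On the Linux side of this workflow, the first hop is the client talking to the daemon. The sketch below shows that hop in isolation, assuming the daemon listens on the commonly used socket path `/var/run/docker.sock` and that the platform supports UNIX domain sockets (Linux or macOS). The class and function names are illustrative, not part of any Docker library. It sends a plain HTTP `GET /version` request over the socket and returns `None` if no daemon is reachable.

```python
import http.client
import json
import socket

# Assumed default socket path; installations can configure a different one.
DOCKER_SOCKET = "/var/run/docker.sock"


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that connects to a UNIX domain socket instead of TCP."""

    def __init__(self, socket_path, timeout=5):
        # The host name is only used for the Host header.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        try:
            sock.connect(self.socket_path)
        except OSError:
            sock.close()
            raise
        self.sock = sock


def daemon_version(socket_path=DOCKER_SOCKET):
    """GET /version from the daemon; return parsed JSON, or None if unreachable."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", "/version")
        response = conn.getresponse()
        if response.status != 200:
            return None
        return json.loads(response.read())
    except (OSError, ValueError, http.client.HTTPException):
        return None
    finally:
        conn.close()


if __name__ == "__main__":
    info = daemon_version()
    print(info if info is not None else "No Docker daemon reachable on the socket")
```

The `docker` command-line client makes this kind of request on the user's behalf, so the sketch is only meant to make the client-to-daemon step visible.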

Comparison of Docker and Virtual Machines (VMs):

| Feature | Docker | Virtual Machines |
|---|---|---|
| Resource Allocation | Dynamic, on-demand | Dedicated, fixed |
| OS Layer | Shares host kernel | Each VM has its own OS |
| Startup Time | Fast (seconds) | Slow (minutes, due to booting OS) |
| Overhead | Low | High (due to guest OS overhead) |
| Scalability | Highly scalable (lightweight) | Less scalable (heavier) |
| Isolation | Process-level isolation | Full hardware-level isolation |

Architecture of Docker:

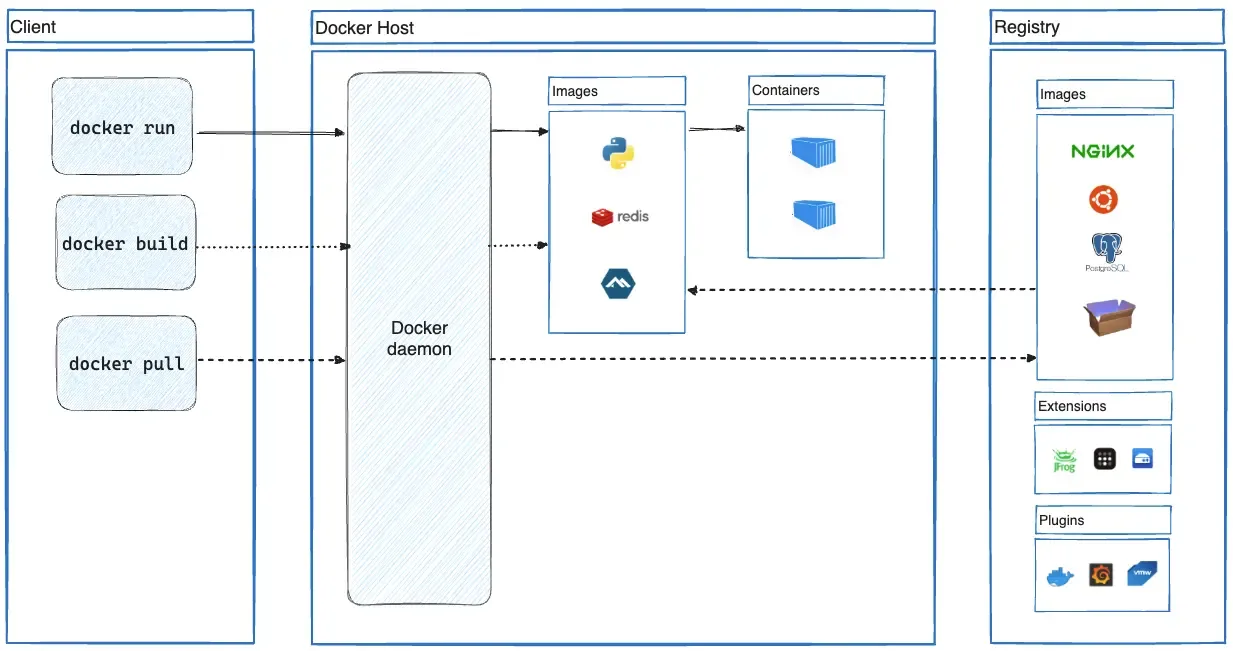

Docker uses a client-server architecture in which the client talks to the daemon (server) through a REST API over UNIX sockets or a network interface. The client and daemon can run on the same system, or the client can connect to a remote daemon. Below is a breakdown of its architecture.

- Docker Client: The Docker Client is the primary interface for interacting with the Docker Server (daemon) via REST APIs over UNIX sockets or a network. It provides tools like the Command-Line Interface (CLI) and GUI to send commands, such as `docker run`, to the Docker Server for managing containers and resources. The Docker Client can be run locally or remotely, offering flexibility in managing Docker environments.

- Docker Host: The Docker Host is the physical or virtual machine where Docker is installed. It provides the underlying infrastructure and resources (CPU, memory, storage, etc.) required to run Docker. It wraps two main components:

- Docker Server (daemon): The Docker Server (daemon) is the server-side component of Docker. It handles REST API requests from the Docker Client and manages Docker objects such as images, containers, networks, and volumes. It is responsible for operations such as creating, starting, and stopping containers, building images, and managing storage. The daemon can also communicate with other daemons to orchestrate distributed services. The daemon assigns a unique container ID to every container it creates, which is how that container is identified in later commands.

- Docker Objects: Docker objects are the entities that are created, managed, and manipulated by the Docker daemon. These objects include:

- Images: A Docker image is a read-only template used to create Docker containers. It contains the instructions to create a container and can be built from a `Dockerfile`. Images are often stored in a registry like Docker Hub.

- Containers: A container is a running instance of an image. It represents an isolated, executable environment with its own file system, network, and process space. Containers are lightweight and designed to be ephemeral, meaning they can be started, stopped, and removed easily.

- Networks: Docker networks enable communication between containers, the Docker host, and external systems. Containers on the same network can interact with each other, and different types of networks (bridge, host, overlay, etc.) are available depending on the use case.

- Volumes: Volumes are used to persist data generated or used by Docker containers. They are stored outside the container’s file system, allowing for data to persist even when the container is removed or recreated. Volumes are commonly used for database storage or shared data between containers.

- Docker Registry: A Docker registry is a repository where Docker images are stored and shared. Docker Hub is a public registry that anyone can use, and Docker looks for images on Docker Hub by default. You can also run your own private registry.
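The "Docker Hub by default" behaviour can be pictured as a name expansion: a short name such as `nginx` is treated as shorthand for a fully qualified reference on Docker Hub. The function below is a simplified sketch of that expansion, written for illustration only. It skips validation and some edge cases that Docker itself handles, and the function name and the example registry host are hypothetical.

```python
def normalize_image_reference(ref):
    """Expand a short image name into registry/path:tag form (simplified)."""
    # Split off an optional digest ("name@sha256:...").
    name, _, digest = ref.partition("@")

    # A tag is a colon that appears after the last slash, so that the port in
    # "localhost:5000/app" is not mistaken for a tag.
    last_slash = name.rfind("/")
    colon = name.rfind(":")
    if colon > last_slash:
        name, tag = name[:colon], name[colon + 1:]
    else:
        tag = ""

    # The first path component counts as a registry host only if it looks
    # like one: it contains a dot or a port, or it is "localhost".
    first, sep, rest = name.partition("/")
    looks_like_host = "." in first or ":" in first or first == "localhost"
    if sep and looks_like_host:
        registry, path = first, rest
    else:
        registry, path = "docker.io", name

    # Official images on Docker Hub live under the "library" namespace.
    if registry == "docker.io" and "/" not in path:
        path = "library/" + path

    # With neither a tag nor a digest, the tag defaults to "latest".
    if not tag and not digest:
        tag = "latest"

    result = f"{registry}/{path}"
    if tag:
        result += ":" + tag
    if digest:
        result += "@" + digest
    return result


if __name__ == "__main__":
    for example in ["nginx", "myuser/app", "localhost:5000/app",
                    "registry.example.com/team/app:2.0"]:
        print(f"{example:40s} -> {normalize_image_reference(example)}")
```

A reference that already names a registry host, such as a private registry, is left pointing at that host; only unqualified names fall back to Docker Hub.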

What is Docker Compose?

Docker Compose is a tool for defining and managing multi-container Docker applications using a single configuration file (docker-compose.yml). It simplifies running multiple interconnected containers with a single command.

Key Features:

- Multi-Container Management: Easily manage multiple containers that interact with each other in a single application.

- Declarative Configuration: Define your services, networks, and volumes in a `docker-compose.yml` file for easy setup and consistency across environments.

- Networking: Automatically creates a network for your containers to communicate with each other. Custom networks can also be defined.

- Environment Variables & Secrets: Set environment variables directly in the Compose file to configure your containers (e.g., database passwords, API keys).

- Single Command Operations:

- `docker-compose up`: Start all containers as defined in the `docker-compose.yml` file.

- `docker-compose down`: Stop and remove all containers and networks.

- `docker-compose logs`: View the logs for all running services.

- `docker-compose watch`: Watch the source files listed in each service's `develop.watch` section of the `docker-compose.yml` file and automatically sync or rebuild the affected services when those files change (requires a Compose version that supports watch).

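To show how the pieces of a Compose file fit together, here is a rough sketch that builds a two-service definition as a Python dictionary and writes it out as JSON. Everything specific in it is a hypothetical example: the service names `web` and `db`, the image tags, the port mapping, the `db-data` volume, the `DB_PASSWORD` variable, and the `compose.json` file name. It also relies on the assumption that Compose accepts a JSON file because JSON is a subset of YAML, which is worth checking against your Compose version.

```python
import json


def build_compose_model():
    """Return a Compose-style definition with two services and one named volume."""
    return {
        "services": {
            "web": {
                "image": "nginx:1.27",
                # Publish container port 80 on host port 8080.
                "ports": ["8080:80"],
                # Start the database service before the web service.
                "depends_on": ["db"],
            },
            "db": {
                "image": "postgres:16",
                # The value is taken from the shell environment or a .env file,
                # so the password is not written into the Compose file itself.
                "environment": {"POSTGRES_PASSWORD": "${DB_PASSWORD}"},
                # Persist database files in a named volume.
                "volumes": ["db-data:/var/lib/postgresql/data"],
            },
        },
        # Named volumes used by services are declared at the top level.
        "volumes": {"db-data": {}},
    }


if __name__ == "__main__":
    # Hypothetical output file name.
    with open("compose.json", "w") as f:
        json.dump(build_compose_model(), f, indent=2)
    print("Wrote compose.json")
```

With a file like this in place, a command along the lines of `docker-compose -f compose.json up` would be expected to start both services on a shared default network, where `web` can reach the database under the host name `db`.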